Biography

Hi, I am Junwei Li, a researcher at The Hong Kong University of Science and Technology (Guangzhou), or HKUST(GZ). I earned my Master of Philosophy (M.Phil.) degree in Data Science and Analytics from HKUST(GZ) in 2025, supervised by Prof. Jing TANG and Prof. Lei CHEN. My research interests include multi-agent LLM systems, retrieval-augmented generation (RAG) with KV-cache reuse, and compliance-aware, privacy-preserving AI companions for cloud–edge deployment in education and assistive settings. I also serve as President of the Entrepreneurship Association at HKUST(GZ).

Recent News

- Oct 20 — I will attend ICHEC 2025 @ Singapore and present a full paper.

- Oct 2 — I was invited to serve as a reviewer for CHI 2026.

- Jun 6 — One paper on real-time conversational digital humans (Hi-Reco) was accepted at CGI 2025!

Attending

- Nov 21 - Nov 23 — ICHEC 2025 @ Singapore.

- Jul 14 - Jul 18 — CGI 2025 @ Kowloon, Hong Kong.

- Apr 08 - Apr 14 — 50th International Exhibition of Inventions Geneva @ Geneva, Switzerland.

Interests

- Multi-Agent LLM Systems

- RAG/Graph-RAG

- Educational Technology

- Affective Computing

- Human–Computer Interaction

Education

- MPhil in Data Science and Analytics, 2025
  The Hong Kong University of Science and Technology (Guangzhou)

- BSc in E-commerce with Business Analytics, 2021
  Beijing Normal University, Zhuhai

🏆 Selected Honors

Our team won the National Championship at the first L’Oréal Beauty Tech Hackathon in China. I am grateful for the chance to compete in the China Grand Final of this inaugural event!

As a member of Jacobi.ai (an HKUST(GZ) spin-off), I was part of the team that won a Gold Medal at the 50th International Exhibition of Inventions Geneva 2025, Switzerland.

News

2025

- One paper on a multi-agent system framework for beauty tech was accepted to ICHEC 2025. (Oct 20)

- I was invited to serve as a reviewer for ICHEC 2025. (Oct 04)

- I was invited to serve as a reviewer for CHI 2026. (Oct 02)

- I earned my M.Phil. degree in Data Science and Analytics. (Jul 30)

- We were named Champion of the 2025 “Win in Jiangyin” Global Innovation and Entrepreneurship Competition for Overseas Talents. (Jul 10)

- We won the HKUST(GZ) RedBird Outstanding RBM Project Award. (May 01)

2024

- We won an Excellence Award in the GBA Postgraduate Innovation and Entrepreneurship Competition. (Dec 01)

- We won an Excellence Award in the HKUST Million-Dollar Competition. (Oct 01)

- We won Second Prize in the Baidu PaddlePaddle AI Competition. (Aug 01)

Experience

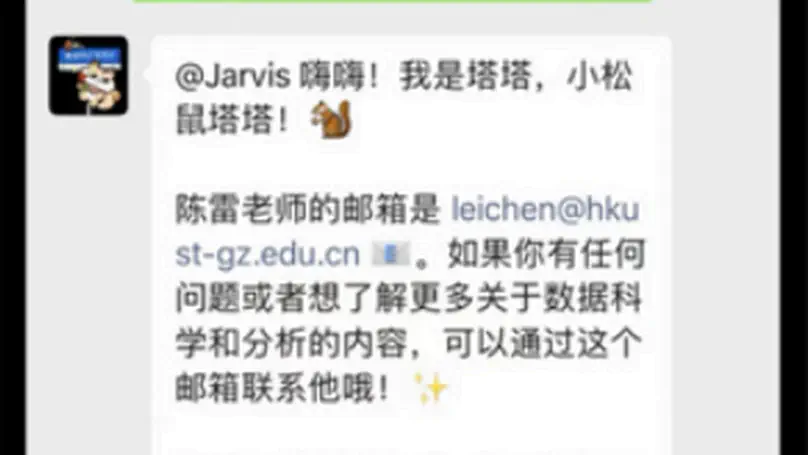

The Lab of Future Technology is part of the College of Future Technology (CFT) at HKUST(GZ) and is led by Prof. Jingshen Wu.

As the Entrepreneurial Project Owner and Product Manager, I lead the Aicorumi and TATA AI projects, two AI agent systems incubated within the Bridge Program. The projects have received ¥600,000 in non-equity funding to accelerate development and productization.

My core responsibilities and contributions include:

- I lead the architecture design of our multi-agent systems and the fine-tuning of our large language models (LLMs).

- I drive the development of features built on the Model Context Protocol (MCP), in line with the project’s goals.

- I define the product strategy and roadmap, translating complex AI capabilities into market-ready products.

The Metaverse Joint Innovation Laboratory is part of the Data Science and Analytics Thrust (DSA Thrust) at HKUST(GZ) and is led by Prof. Lei Chen.

My responsibilities include:

- Conducting research on LLM-related projects, with a focus on multi-agent systems.

- Managing lab affairs as a core member.

As the AI Product Manager, I owned the full lifecycle (v1.0 to v4.0) of a proprietary conversational AI solution for the healthcare sector. I drove its 0-to-1 launch in a live hospital setting, acquiring over 1,000 seed users and establishing a critical proof of concept.

My core responsibilities and contributions include:

- I defined the product’s technical specifications, including key model evaluation metrics and data annotation guidelines.

- I managed the creation of a high-quality, domain-specific dataset, sourced directly from on-site feedback sessions with doctors, nurses, patients, and their families, which was instrumental in mitigating model hallucinations.

- I helped shape the AI engine’s technology roadmap, ensuring its architecture was optimized for both accuracy and inference speed.

As a Product Operator at JD Retail, I worked on both driving business growth and shaping the underlying product and technology. By building data dashboards and analyzing user-behavior funnels, I provided data-driven growth strategies for a portfolio of 60+ flagship stores, including top-tier lifestyle service brands, consistently achieving over ¥10 million in monthly GMV.

My core responsibilities and contributions include:

- I acted as the technical product liaison for our B2B enterprise services, defining API requirements and coordinating integration testing with 9 major chain brands.

- I played a key role in the product feature planning for a JD.com S-tier omni-channel marketing campaign, driving the implementation of key “Unbounded Retail” features like LBS-based recommendations.

- I identified and translated merchant pain points into actionable product optimization requirements for our backend systems, successfully enhancing partner operational efficiency.

Projects

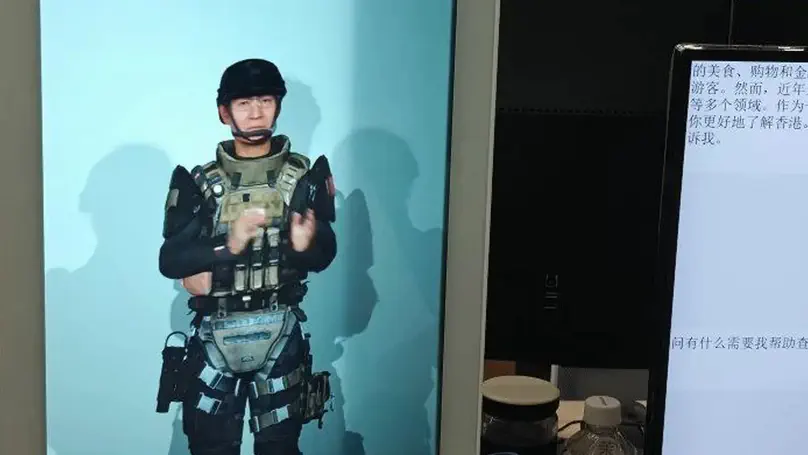

A high-fidelity, real-time conversational digital human that combines a photorealistic 3D avatar, persona-driven expressive TTS, and knowledge-grounded dialogue. An asynchronous, low-latency pipeline coordinates these components, using history-augmented retrieval and intent-based routing. This project was supervised by Prof. Zeyu Wang.

Featured Publications

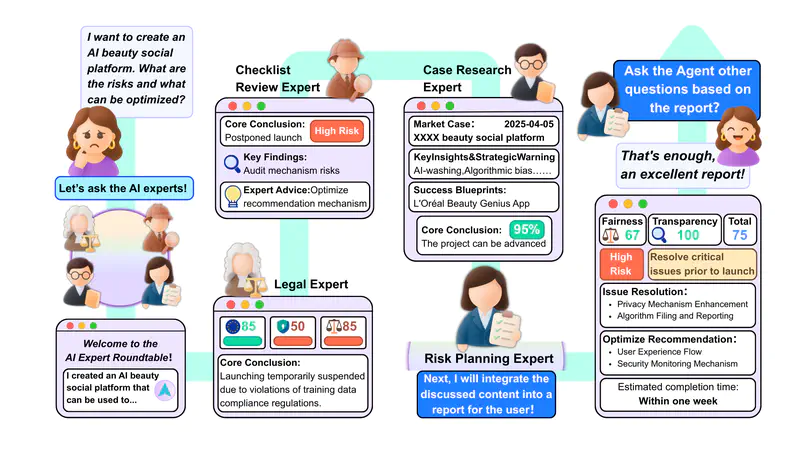

As generative AI enters enterprise workflows, ensuring compliance with legal, ethical, and reputational standards becomes a pressing challenge. In beauty tech, where biometric and personal data are central, traditional reviews are often manual, fragmented, and reactive. To examine these challenges, we conducted a formative study with six experts (four IT managers and two legal managers) at a multinational beauty company. The study revealed pain points in rule checking, precedent use, and the lack of proactive guidance. Motivated by these findings, we designed a multi-agent “roundtable” system powered by a large language model. The system assigns role-specialized agents for legal interpretation, checklist review, precedent search, and risk mitigation, and synthesizes their perspectives into structured compliance advice. We evaluated the prototype with the same experts using the System Usability Scale (SUS), the NASA Task Load Index (NASA-TLX), and interviews. Results show high usability (SUS: 77.5/100) and low cognitive workload, with three key findings: (1) multi-agent systems can preserve tacit knowledge in standardized workflows, (2) information augmentation achieves higher acceptance than decision automation, and (3) successful enterprise AI should mirror organizational structures. This work contributes design principles for human-AI collaboration in compliance review, with broader implications for regulated industries beyond beauty tech.

High-fidelity digital humans are increasingly used in interactive applications, yet achieving both visual realism and real-time responsiveness remains a major challenge. We present a high-fidelity, real-time conversational digital human system that seamlessly combines a visually realistic 3D avatar, persona-driven expressive speech synthesis, and knowledge-grounded dialogue generation. To support natural and timely interaction, we introduce an asynchronous execution pipeline that coordinates multi-modal components with minimal latency. The system supports advanced features such as wake word detection, emotionally expressive prosody, and highly accurate, context-aware response generation. It leverages novel retrieval-augmented methods, including history augmentation to maintain conversational flow and intent-based routing for efficient knowledge access. Together, these components form an integrated system that enables responsive and believable digital humans, suitable for immersive applications in communication, education, and entertainment.

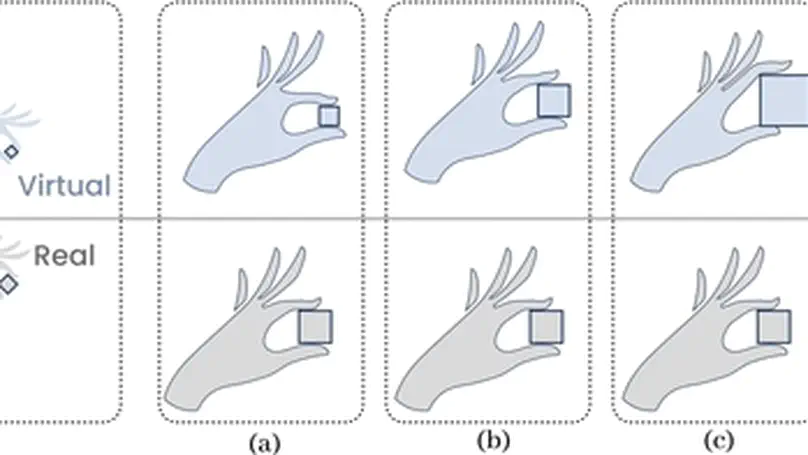

Sandplay is an effective psychotherapy for mental health treatment, and many people prefer to engage in sandplay in virtual reality (VR) because of its convenience. Haptic perception of physical objects and miniatures enhances realism and immersion in VR. Previous studies have rendered object size by exerting pressure on the user’s fingertips or by employing tangible, shape-changing devices. However, these interfaces are limited by the physical shapes they can assume, making it difficult to simulate objects larger or smaller than the interface itself. Motivated by literature on visual-haptic illusions, this work aims to convey the haptic sensation of a virtual object’s shape by exploring the relationship between haptic feedback from real objects and their visual renderings in VR. Our study focuses on confirmation and adjustment ratios across different virtual object sizes. The results show that the likelihood of participants confirming the correct size of virtual cubes decreases as object size increases, with larger objects requiring more adjustments. This research provides insights into the relationship between haptic sensations and visual inputs, contributing to the understanding of visual-haptic illusions in VR environments.


Contact

- jli801@connect.hkust-gz.edu.cn

- Duxue Road No.1, Nansha District, Guangzhou, 510000
